Image Management Process

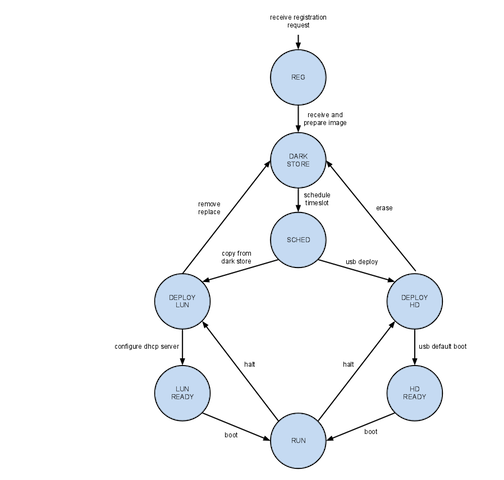

The diagram above shows a proposed state machine for the image management process. Note that the flow diverges into one of two paths depending on the type of node being deployed to (root node vs. mesh node).

The following sections detail each of the states.

Project Registration (REG)

The process for scheduling time on Centmesh is TBD. The intent is that a researcher will contact Rudra or Mihai to ask about using the Centmesh testbed.

Dark Storage (DARK STORE)

The researcher will submit their image to the OSCAR lab, where the image will be prepared and validated to run on Centmesh hardware and then stored in the dark storage system using Clonezilla. Dark storage is a 6TB Linux md (software RAID) installation on a white-box PC, which exports an NFS share to one of the staging PCs in the OSCAR lab. This storage is the repository for all images. Images will be kept in Clonezilla format for easy storage and retrieval, in a simple two-level tree: one directory per project, containing that project's images.
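A minimal sketch of the two-level project/image tree, assuming the NFS export is mounted at a hypothetical `/mnt/darkstore` on the staging PC and that each Clonezilla image is a directory inside its project directory. `image_path` and `list_images` are illustrative helpers, not existing tools.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical mount point of the dark storage NFS export on the staging PC.
DARK_STORE_ROOT = Path("/mnt/darkstore")


def image_path(root: Path, project: str, image: str) -> Path:
    """Return <root>/<project>/<image>, the directory holding one Clonezilla image."""
    for part in (project, image):
        # Reject names that would add a level or escape the two-level tree.
        if not part or "/" in part or part in (".", ".."):
            raise ValueError(f"invalid path component: {part!r}")
    return root / project / image


def list_images(root: Path) -> Dict[str, List[str]]:
    """Map each project directory under root to the sorted image directories inside it."""
    return {
        project.name: sorted(img.name for img in project.iterdir() if img.is_dir())
        for project in sorted(root.iterdir())
        if project.is_dir()
    }
```

Keeping the tree at exactly two levels means a project name and an image name are enough to locate any image for a Clonezilla restore.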

Scheduled (SCHED)

After a researcher negotiates a timeslot to access the Centmesh testbed, the project is in the scheduled state. The schedule will be managed with a Google Calendar until a more elaborate system is needed. A partition will also be assigned; see Partition Assignment below.

Deployed (DEPLOY)

The deployed state has two instances depending on the type of node the image is deployed to - DEPLOY LUN (root node) or DEPLOY HD (mesh node).

DEPLOY LUN

LUN storage is implemented as two iSCSI LUNs configured on the NetApp 3070 in the OSCAR lab. Only one LUN is active at a time; the inactive LUN is used to stage the next image to be booted on the root nodes. The LUNs can be cloned to produce a unique LUN for each root node in the system. This storage system is an integral part of the image deployment scheme described above.
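The active/inactive pair works as an A/B staging scheme. The sketch below models it in Python; the LUN names, the `LunPair` class, and the per-node clone naming are hypothetical, and nothing here talks to the NetApp itself.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Tuple


@dataclass
class LunPair:
    """Two iSCSI LUNs: root nodes boot the active one, the other stages the next image."""

    names: Tuple[str, str] = ("lun_a", "lun_b")  # hypothetical LUN names
    active: int = 0  # index into names of the LUN currently booted
    loaded: Dict[str, Optional[str]] = field(default_factory=dict)  # LUN -> image

    @property
    def active_lun(self) -> str:
        return self.names[self.active]

    @property
    def inactive_lun(self) -> str:
        return self.names[1 - self.active]

    def stage(self, image: str) -> str:
        """Record the next image as loaded on the inactive LUN; the running system is untouched."""
        self.loaded[self.inactive_lun] = image
        return self.inactive_lun

    def swap(self) -> str:
        """Make the staged LUN active; root nodes pick it up on their next boot."""
        if self.loaded.get(self.inactive_lun) is None:
            raise RuntimeError("no image staged on the inactive LUN")
        self.active = 1 - self.active
        return self.active_lun

    def clone_names(self, root_nodes: Iterable[str]) -> Dict[str, str]:
        """Name one clone of the active LUN per root node (hypothetical naming scheme)."""
        return {node: f"{self.active_lun}_{node}" for node in root_nodes}
```

Staging on the inactive LUN lets the next image be prepared while the current one is still in use, and the switch is a single flip of `active`.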

Root nodes will attach to an iSCSI LUN through their 1GbE connection to the NCSU network. The LUN resides on the NetApp in the OSCAR lab. The boot process is as follows:

- BIOS is configured to PXE boot through the NIC connected to the NCSU network

- the NIC's PXE firmware sends a DHCP request

- DHCP serves the IP address information and the TFTP server address

- the NIC downloads a gPXE image from the TFTP server

- gPXE sends its own DHCP request

- DHCP serves the URL of the iSCSI LUN

- gPXE attaches to the iSCSI LUN and hands off the boot process to it
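The boot sequence relies on the DHCP server answering the two requests differently. A sketch of that decision, assuming the common convention that gPXE identifies itself with the DHCP user-class `gPXE` and that the LUN URL is an RFC 4173 `iscsi:` root path. The server addresses, image file name, and target IQN are placeholders.

```python
from typing import Dict

# Placeholders: substitute the real TFTP server address and gPXE image file name.
TFTP_SERVER = "192.0.2.10"
GPXE_IMAGE = "gpxe.pxe"


def iscsi_root_path(server: str, target_iqn: str, lun: int = 0, port: int = 3260) -> str:
    """Build an RFC 4173 root path: iscsi:<server>:<protocol>:<port>:<LUN>:<target>."""
    # Empty protocol field means TCP; RFC 4173 writes the LUN in hexadecimal.
    return f"iscsi:{server}::{port}:{lun:x}:{target_iqn}"


def dhcp_reply(user_class: str, root_path: str) -> Dict[str, str]:
    """Pick the DHCP options for one request of the two-stage boot."""
    if user_class == "gPXE":
        # Second request: gPXE is already running, so point it at the iSCSI LUN.
        return {"root-path": root_path}
    # First request: the NIC's PXE firmware needs a TFTP server and a file to fetch.
    return {"next-server": TFTP_SERVER, "filename": GPXE_IMAGE}
```

For example, `iscsi_root_path("192.0.2.20", "iqn.2010-01.edu.example:centmesh-root")` returns `"iscsi:192.0.2.20::3260:0:iqn.2010-01.edu.example:centmesh-root"`. In practice this branching lives in the DHCP server's configuration rather than in Python.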

DEPLOY HD


Hard Drive Configuration

The hard drive partition scheme is as follows:

- (1GB ext3 /dev/sda1) the first primary partition stores the GRUB directory (more on GRUB below)

- (9GB swap /dev/sda2) the second primary partition is a common linux swap space

- (20GB ext3 /dev/sda3) the third primary partition is reserved for later use

- the fourth primary partition (/dev/sda4) is an extended partition that takes up the rest of the drive

- the next 21 logical partitions (20GB each, /dev/sda5 through /dev/sda25) are 'slots' used for deploying researcher images.
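The partition scheme can be written down as data, which makes the slot numbering and the minimum disk size easy to check. A sketch; `Partition` and `mesh_node_layout` are illustrative names, and the size ignores partition-table alignment overhead.

```python
from typing import List, NamedTuple


class Partition(NamedTuple):
    device: str
    size_gb: int  # 0 means "rest of the drive" (the extended partition)
    kind: str
    purpose: str


def mesh_node_layout(disk: str = "/dev/sda", slots: int = 21, slot_gb: int = 20) -> List[Partition]:
    """Describe the mesh node partition scheme as data."""
    layout = [
        Partition(f"{disk}1", 1, "ext3", "GRUB directory"),
        Partition(f"{disk}2", 9, "swap", "Linux swap"),
        Partition(f"{disk}3", 20, "ext3", "reserved"),
        Partition(f"{disk}4", 0, "extended", "holds the logical slot partitions"),
    ]
    # Logical partitions inside the extended partition are numbered from 5.
    for n in range(5, 5 + slots):
        layout.append(Partition(f"{disk}{n}", slot_gb, "slot", "researcher image"))
    return layout


def minimum_disk_gb(layout: List[Partition]) -> int:
    """Sum of the fixed sizes; the extended partition only contains the slots."""
    return sum(p.size_gb for p in layout)
```

`minimum_disk_gb(mesh_node_layout())` evaluates to 450: 30 GB of fixed primary partitions plus 21 slots of 20 GB each.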

Grub Configuration

The boot process is as follows:

- When the PC boots, BIOS finds the hard drive and runs GRUB stage1 from the MBR (master boot record). Stage1 is installed with the location of GRUB stage2 and grub.conf, both on the first partition (/dev/sda1).

- grub.conf on /dev/sda1 lists all the slot partitions, each with a chainloader command, and a default partition to boot

- the boot process is passed to the first sector of the default partition listed in grub.conf on /dev/sda1

- GRUB starts again from the stage1 in the first sector of that partition

- from there, the boot proceeds however that partition's installation is configured
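A sketch of generating the first-stage grub.conf on /dev/sda1 in GRUB legacy syntax, with one `chainloader +1` entry per slot. It assumes the mesh node disk is BIOS drive `hd0`; the 5-second timeout and the entry titles are arbitrary choices. GRUB legacy numbers partitions from 0, so /dev/sda5 is `(hd0,4)`.

```python
from typing import Iterable


def grub_partition(n: int, disk_index: int = 0) -> str:
    """Map /dev/sdaN to GRUB legacy notation; GRUB counts from 0, so sda5 -> (hd0,4)."""
    return f"(hd{disk_index},{n - 1})"


def make_grub_conf(slots: Iterable[int], default_slot: int, timeout: int = 5) -> str:
    """Render a first-stage grub.conf that chainloads each slot partition's boot sector."""
    slots = list(slots)
    if default_slot not in slots:
        raise ValueError("default_slot must be one of the listed slots")
    # GRUB legacy's 'default' is the 0-based index of a menu entry.
    lines = [f"default {slots.index(default_slot)}", f"timeout {timeout}", ""]
    for n in slots:
        lines += [
            f"title slot sda{n}",
            f"rootnoverify {grub_partition(n)}",
            "chainloader +1",  # jump to the GRUB stage1 in the partition's first sector
            "",
        ]
    return "\n".join(lines)
```

Selecting the image to boot next then amounts to rewriting the `default` line; `make_grub_conf(range(5, 26), default_slot=7)` picks the third entry (`default 2`).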

Why do this?

- it's simpler - no need to stuff every boot detail in the first GRUB process

- it's flexible - fancy boot processes can be isolated in the partition

- it's easier to deploy - just copy all bits from a properly configured partition, then install GRUB in the first sector of the partition copied to.
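The deployment step in the last bullet can be sketched as a dry-run command plan. This assumes the source is a raw copy of a configured partition (a device or raw image file, not a Clonezilla archive) no larger than the 20GB slot, and uses the GRUB legacy shell's `root`/`setup` commands to write stage1 into the slot's first sector rather than the MBR; `setup` also expects the slot's own /boot/grub stage files to be present. The function name is hypothetical, and it only builds command strings without running them.

```python
import shlex
from typing import List


def deploy_commands(source: str, slot: int, disk: str = "/dev/sda") -> List[str]:
    """Dry run: list the shell commands that would deploy `source` into slot /dev/sdaN."""
    target = f"{disk}{slot}"
    grub_dev = f"(hd0,{slot - 1})"  # GRUB legacy counts partitions from 0
    # printf turns the literal \n sequences into newlines for the GRUB shell.
    grub_script = f"root {grub_dev}\\nsetup {grub_dev}\\nquit\\n"
    return [
        # 1. Copy every bit of the prepared partition onto the slot.
        f"dd if={shlex.quote(source)} of={target} bs=4M",
        # 2. Write GRUB stage1 into the slot's first sector, not the MBR.
        f"printf {shlex.quote(grub_script)} | grub --batch",
    ]
```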

Partition Assignment

There are 21 partitions on the mesh nodes (not the root nodes) used for storing and running researchers' images: /dev/sda5 through /dev/sda25 on each mesh node's local hard disk. Each image is assigned to a partition and belongs to a project, which in turn belongs to a researcher. If there are more than 21 images to manage, an image must be copied from dark storage and deployed to a previously used partition. Slot assignments will be documented in this Centmesh wiki. The process for assigning partitions is TBD.
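Because the assignment process is TBD, the following is only a sketch of one possible policy: hand out free slots in order and, once all 21 are in use, reuse the slot held longest (first in, first out). `SlotAllocator` is a hypothetical name; an evicted image still exists in dark storage and would have to be redeployed.

```python
from collections import OrderedDict
from typing import Iterable, Optional

# The 21 image slots on each mesh node's local disk.
SLOTS = [f"/dev/sda{n}" for n in range(5, 26)]


class SlotAllocator:
    """Track which image occupies each slot (placeholder policy; the real process is TBD)."""

    def __init__(self, slots: Iterable[str] = SLOTS):
        self.free = list(slots)
        self.assigned = OrderedDict()  # image -> slot, oldest assignment first

    def assign(self, image: str) -> str:
        if image in self.assigned:
            return self.assigned[image]
        if self.free:
            slot = self.free.pop(0)
        else:
            # All slots in use: reuse the slot held longest. The evicted image
            # remains in dark storage and must be redeployed to run again.
            _evicted, slot = self.assigned.popitem(last=False)
        self.assigned[image] = slot
        return slot

    def slot_of(self, image: str) -> Optional[str]:
        return self.assigned.get(image)
```

The `assigned` mapping is the same information the wiki's slot table would record.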

Ready (READY)

The ready state has two instances depending on the type of node the image is deployed to - LUN READY (root node) or HD READY (mesh node).

LUN READY

An image in the LUN READY state is loaded in a LUN that is set to boot on the next boot of a root node. If the image is removed from the LUN, the image returns to the DARK STORE state.

HD READY

An image in the HD READY state is loaded in a partition of the hard drive on every mesh node and is set to boot on the next boot. If the image is removed from the hard drive, the image returns to the DARK STORE state.

Running (RUN)

An image in the RUN state means the image is running on all root and mesh nodes.
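The states above can be encoded as a transition table so that tooling rejects illegal moves. This is a sketch reconstructed from the section order, not from the diagram itself: only the return from LUN READY / HD READY to DARK STORE is stated explicitly in the text, and leaving RUN is not described, so RUN has no outgoing transitions here.

```python
from enum import Enum
from typing import Dict, FrozenSet


class State(Enum):
    REG = "Project Registration"
    DARK_STORE = "Dark Storage"
    SCHED = "Scheduled"
    DEPLOY_LUN = "Deployed (root node)"
    DEPLOY_HD = "Deployed (mesh node)"
    LUN_READY = "Ready (root node)"
    HD_READY = "Ready (mesh node)"
    RUN = "Running"


# Allowed transitions, reconstructed from the section order; the diagram may differ.
TRANSITIONS: Dict[State, FrozenSet[State]] = {
    State.REG: frozenset({State.DARK_STORE}),
    State.DARK_STORE: frozenset({State.SCHED}),
    State.SCHED: frozenset({State.DEPLOY_LUN, State.DEPLOY_HD}),
    State.DEPLOY_LUN: frozenset({State.LUN_READY}),
    State.DEPLOY_HD: frozenset({State.HD_READY}),
    # Removing the image from the LUN or HD returns it to dark storage.
    State.LUN_READY: frozenset({State.RUN, State.DARK_STORE}),
    State.HD_READY: frozenset({State.RUN, State.DARK_STORE}),
    State.RUN: frozenset(),  # exit from RUN is not described in the text
}


def advance(current: State, target: State) -> State:
    """Return target if the move is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target
```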

Centmesh Construction and Deployment

http://wiki.oscar.ncsu.edu/wiki/index.php/CentMesh_Deployment_Information

Attachments (1)

- imagestatemachinev3-small.png (63.5 KB) - added by jbass 4 years ago.
